PointSplit: Towards On-device 3D Object Detection with Heterogeneous Low-power Accelerators

February 25, 2025

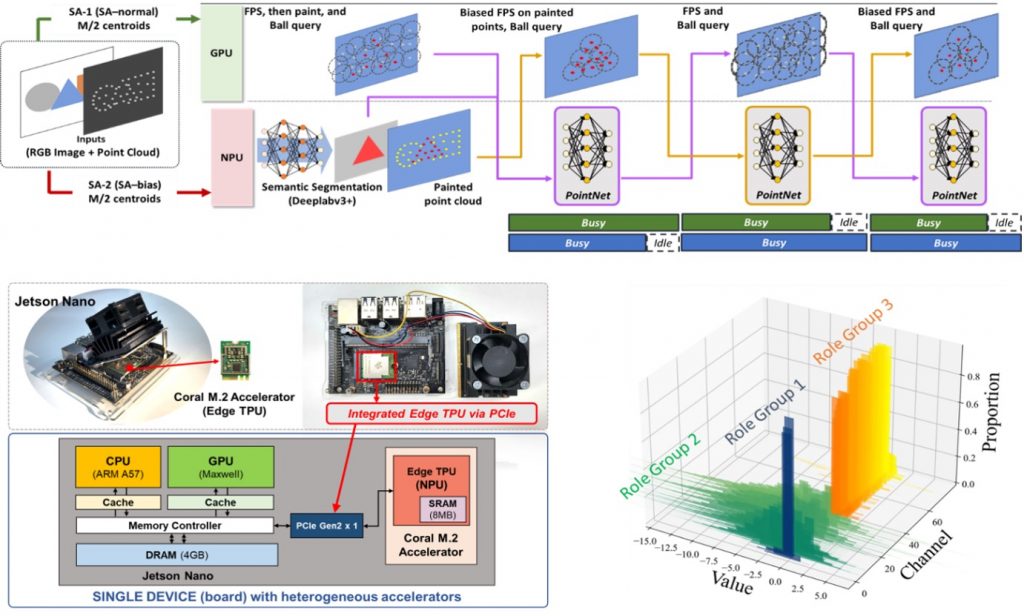

In a recent paper published at IPSN 2023, Prof. Hyung-Sin Kim's team proposed PointSplit, a novel 3D object detection framework for multi-accelerator edge devices. PointSplit optimizes the 3D object detection model architecture to maximize the utilization of both the mobile GPU and the NPU. Through system-algorithm co-optimization, the team demonstrates that PointSplit running on a multi-accelerator device is 24.7× faster than a full-precision, 2D-3D fusion-based 3D detector running on a GPU-only device, with similar accuracy. This work shows the potential of recent edge devices equipped with heterogeneous low-power processors. The team also provides an open implementation so that other researchers can run a wider variety of complex tasks on this new class of edge devices.

[Potential of Multi-accelerator Edge Devices]

Running deep learning models on resource-constrained devices has drawn significant attention because it offers fast response, preserves privacy, and operates robustly regardless of Internet connectivity [1, 2, 3]. While such devices already handle various intelligent tasks, the latest edge devices equipped with multiple types of low-power accelerators (i.e., both a mobile GPU and an NPU) open up another opportunity: 3D object detection, which used to be too heavy for an edge device in the single-accelerator world, might become viable in the emerging heterogeneous-accelerator world.

[Challenges]

Even on the latest edge devices containing both a GPU and an NPU, enabling on-device 3D object detection without sacrificing accuracy is challenging. (1) 3D object detection is typically designed as a sequential process [4], which makes it hard to use the GPU and NPU in parallel. (2) Since the GPU and NPU have different strengths, a 3D object detection model must be analyzed thoroughly so that its computation can be distributed across the two processors synergistically. (3) Fusing 2D vision information with a 3D point cloud can improve detection performance [5, 6, 7], but it makes the computational burden on edge devices even heavier. (4) Quantization is necessary both to reduce computation and to use the NPU, but since 3D object detection is a sophisticated task, a naïve approach would significantly degrade accuracy.
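Challenge (4) hinges on the fact that, in symmetric int8 quantization, every value sharing a scale gets the same step size, so one wide-range tensor can swamp the precision of a narrow-range one. The sketch below is generic per-group symmetric int8 quantization, not PointSplit's actual role-based scheme. The split of a detection head's output into "offset" and "score" channel groups, the value ranges, and all function names are hypothetical, chosen only to illustrate the effect.

```python
import numpy as np

def quantize_int8(x):
    """Symmetric int8 quantization with a single scale for all of `x`."""
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to floats using the given scale."""
    return q.astype(np.float32) * scale

def groupwise_fake_quant(x, groups):
    """Quantize then dequantize each row group of `x` with its own scale."""
    out = np.empty_like(x, dtype=np.float32)
    for rows in groups:
        q, scale = quantize_int8(x[rows])
        out[rows] = dequantize(q, scale)
    return out

# Hypothetical head output: 3 wide-range "offset" channels, 10 narrow "score" channels.
rng = np.random.default_rng(0)
offsets = rng.uniform(-5.0, 5.0, size=(3, 256))
scores = rng.uniform(0.0, 0.05, size=(10, 256))
x = np.concatenate([offsets, scores]).astype(np.float32)

# One scale for the whole tensor: the offsets' range dictates the step size.
q, s = quantize_int8(x)
err_single = float(np.abs(dequantize(q, s) - x)[3:].mean())

# One scale per group: the score channels get a much finer step.
groups = [np.arange(0, 3), np.arange(3, 13)]
err_group = float(np.abs(groupwise_fake_quant(x, groups) - x)[3:].mean())
print(f"score-channel error: single={err_single:.5f} grouped={err_group:.6f}")
```

Grouping by the role a tensor plays lets small-magnitude outputs keep their resolution without giving up int8 arithmetic; how PointSplit actually forms its groups is described in the paper, not here.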

[Proposal]

To tackle these issues, Prof. Kim's team proposed PointSplit, which comprises three main components: (1) 2D-semantics-aware biased farthest point sampling, (2) parallelized 3D feature extraction, and (3) role-based groupwise quantization. The team implemented PointSplit on a resource-constrained test platform that combines an NVIDIA Jetson Nano (which includes a mobile GPU) and a Google Edge TPU (a type of NPU). Extensive experiments on this platform verify the effectiveness of PointSplit in terms of both accuracy and latency.
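The article names biased farthest point sampling (FPS) without describing it. As a minimal sketch, assuming that 2D semantics arrive as a per-point foreground mask (for example, points whose image projection falls inside a 2D detection box) and that the bias is a simple multiplicative weight on the FPS distance, the idea could look like the code below. `biased_fps`, `fg_mask`, `bias`, and the toy scene are hypothetical and are not taken from the PointSplit implementation.

```python
import numpy as np

def biased_fps(points, n_samples, fg_mask, bias=1.0, start=0):
    """Farthest point sampling with foreground distances scaled by `bias`.

    points:  (N, 3) float array of 3D points.
    fg_mask: (N,) bool array; True marks points assumed to carry 2D semantics
             (hypothetical input, e.g. projected inside a 2D detection box).
    bias:    weight >= 1 applied to foreground distances; bias=1 is plain FPS.
    """
    n = points.shape[0]
    n_samples = min(n_samples, n)
    weights = np.where(fg_mask, bias, 1.0)
    selected = np.empty(n_samples, dtype=np.int64)
    selected[0] = start
    # Squared distance from every point to its nearest already-selected point.
    min_dist = np.full(n, np.inf)
    for i in range(1, n_samples):
        last = points[selected[i - 1]]
        d = np.sum((points - last) ** 2, axis=1)
        min_dist = np.minimum(min_dist, d)
        # Plain FPS takes argmax(min_dist); the weights tilt it toward foreground.
        selected[i] = int(np.argmax(min_dist * weights))
    return selected

# Hypothetical toy scene: sparse background plus one small "object" cluster.
rng = np.random.default_rng(0)
background = rng.uniform([0, 0, 0], [10, 10, 2], size=(900, 3))
obj = rng.uniform([4, 4, 0], [4.5, 4.5, 0.5], size=(100, 3))
points = np.concatenate([background, obj])
fg_mask = np.arange(len(points)) >= 900

sel_plain = biased_fps(points, 64, fg_mask, bias=1.0)
sel_biased = biased_fps(points, 64, fg_mask, bias=50.0)
n_fg_plain = int(fg_mask[sel_plain].sum())
n_fg_biased = int(fg_mask[sel_biased].sum())
print(n_fg_plain, n_fg_biased)
```

With `bias=1.0` the routine reduces to ordinary FPS, so one function covers both cases. A larger bias spends more of the fixed sample budget on the object cluster at the cost of background coverage, which is the trade-off a semantics-aware sampler would be tuning.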

Figure. PointSplit’s hardware platform, parallelized pipeline, and role-based quantization

Keondo Park, You Rim Choi, Inhoe Lee, and Hyung-Sin Kim.

IPSN 2023 (ACM/IEEE International Conference on Information Processing in Sensor Networks). https://dl.acm.org/doi/abs/10.1145/3583120.3587045